This ROS package provides a simulation of the picking challenge for the 1st year group project in the FARSCOPE Centre for Doctoral Training at Bristol Robotics Laboratory. It includes:

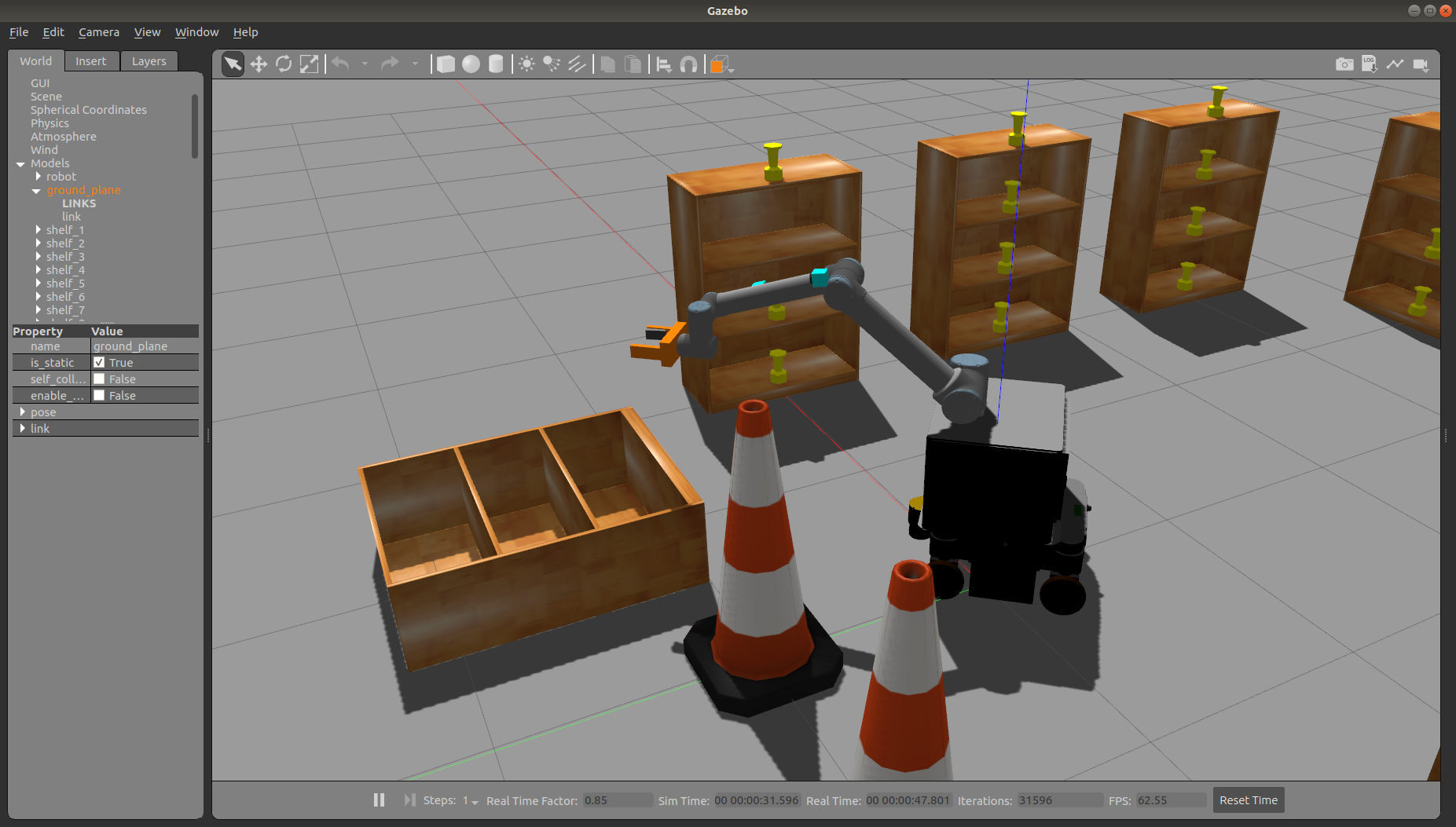

- a simulated environment with targets to be picked from shelves

- a model of FARSCOPE's mobile manipulator robot, the MMO-700 from Neobotix, comprising a UR10 arm on an omnidirectional mobile base.

- a simple gripper model

- a simple camera model

- example control scripts and launch files

The simulation is implemented in Gazebo.

The package was developed in ROS Melodic using Gazebo 9.0.0 on Ubuntu 18.04. It has also been tested on ROS Noetic. It requires the following packages:

- neo_simulation : simulation of the MMO-700 from Neobotix

- universal_robot : the UR10 support from ROS Industrial

- joint_state_publisher_gui : the GUI for generating fake joint states, needed for visualizing the robot

Note: there are different ROS packages for the UR10 arm depending on what firmware is installed. This simulation does not guarantee compatibility with the real FARSCOPE arms as that has yet to be tested.

Note: this package is relatively simple but has not been tested with other versions of ROS and Gazebo.

- If you don't have it already, install ROS Melodic and set up a workspace using these instructions

- Install the

joint_state_publisher_guiusingsudo apt install ros-melodic-joint-state-publisher-gui. (Replacemelodicwith your distribution if required.) - Clone this package, neo_simulation and universal_robot into the workspace

srcdirectory. - Navigate up to the root directory of your ROS workspace (

cd ..fromsrc) and runcatkin_make. - Run

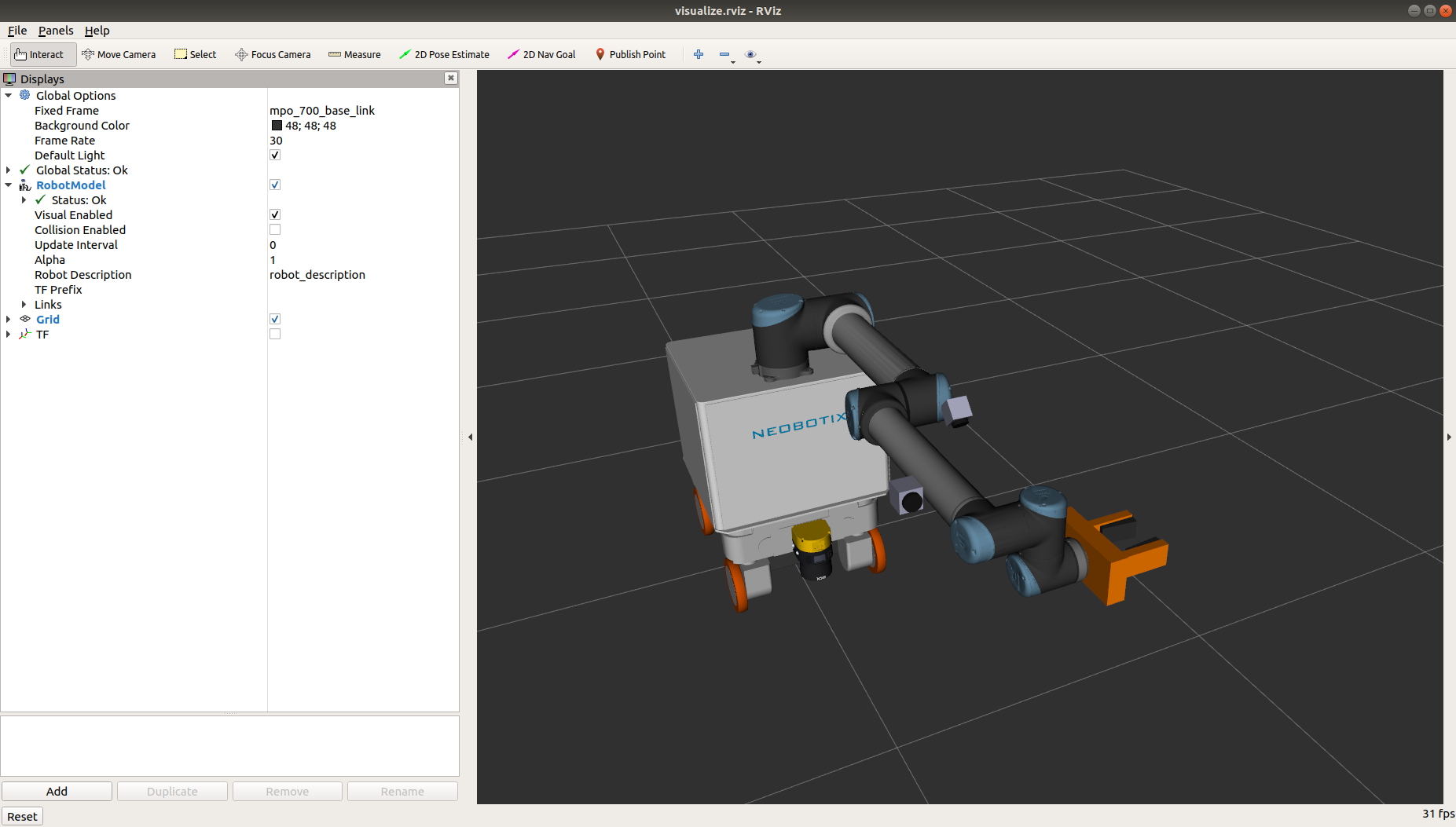

roslaunch farscope_group_project farscope_example_robot_visualize.launch. You should see an RViz visualization of the robot.

- Run

roslaunch farscope_group_project farscope_example_robot_simulate.launch. You should see a Gazebo simulation of the robot picking a target of a shelf and dropping it.

- Run

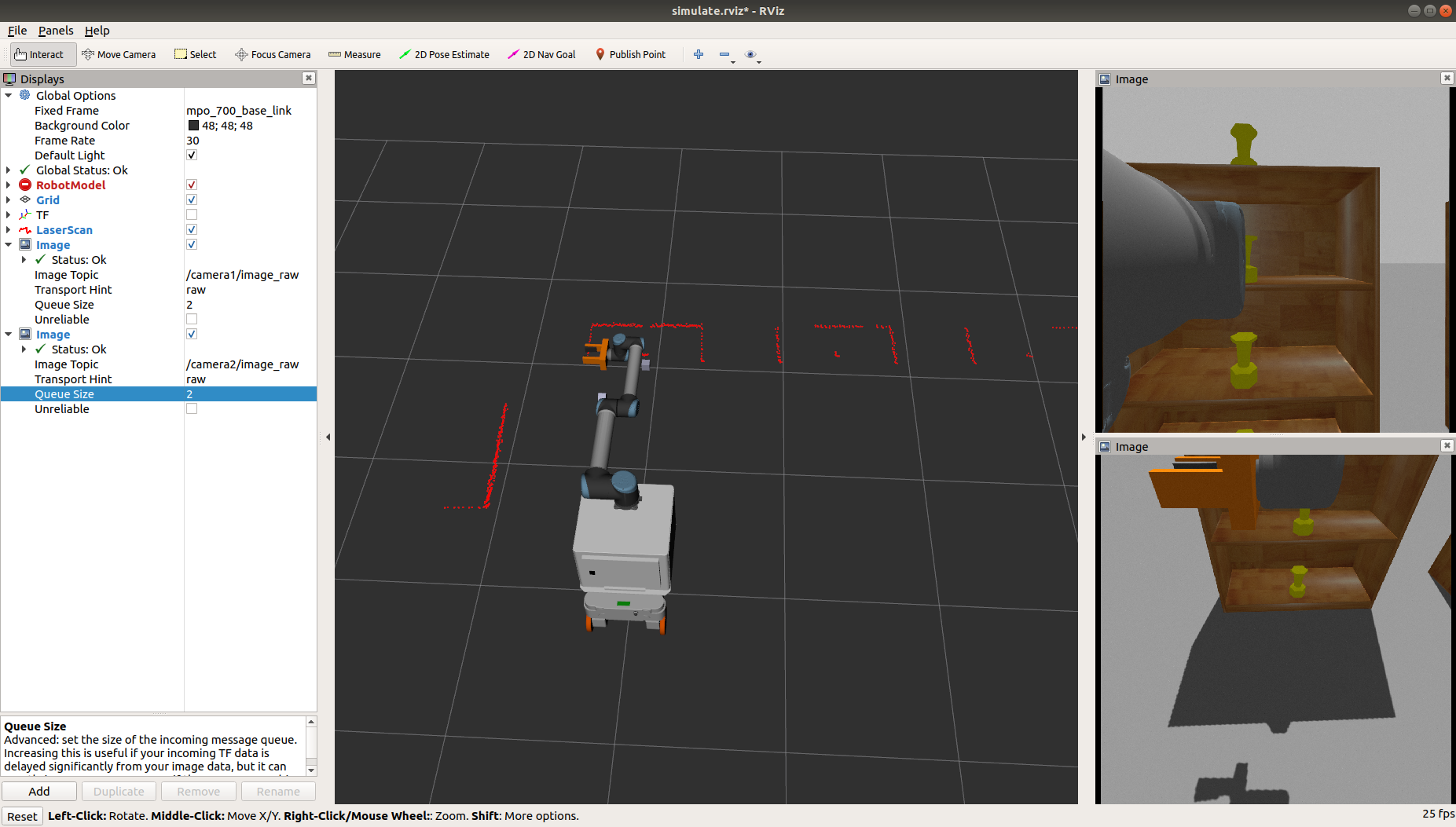

roslaunch farscope_group_project farscope_example_robot_simulate.launch use_gui:=false use_rviz:=true. Now Gazebo will run headless (i.e. no graphics front end) but you can see some of what's happening in RViz, including the LIDAR, the camera views, and the robot pose.

This section describes the ROS interface started by the launch/example_robot/farscope_example_robot_simulate.launch file. Unless otherwise stated, these are all published or subscribed to by the Gazebo node, as a result of plugins enabled in the robot_description URDF.

Also included in that file is the node `scripts/example/test_pickup.py`, which shows examples of how to use the control interface.

Published topics (incomplete list):

- lidar_scan : sensor_msgs/LaserScan : output of the front-mounted LIDAR on the mobile base

- camera1/image_raw : sensor_msgs/Image : output of the forearm camera

- camera2/image_raw : sensor_msgs/Image : output of the upper arm camera

- joint_states : sensor_msgs/JointState : states of all robot joints, including arm and gripper

- odom : nav_msgs/Odometry : odometry from the mobile robot base
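As a rough illustration of consuming the topics listed above, the following Python sketch subscribes to lidar_scan and logs the nearest obstacle. The node name `lidar_listener` and the helper `closest_return` are made up for this example and are not part of the package; the message fields used (`ranges`, `range_min`, `range_max`, `angle_min`, `angle_increment`) are those of sensor_msgs/LaserScan. The ROS imports sit inside `run_node()` so the helper can be tried without a ROS installation.

```python
import math


def closest_return(ranges, range_min, range_max):
    """Return (index, distance) of the nearest valid reading, or None if there is none."""
    best = None
    for i, r in enumerate(ranges):
        # Skip inf/NaN readings and anything outside the sensor's stated limits
        if math.isinf(r) or math.isnan(r) or r < range_min or r > range_max:
            continue
        if best is None or r < best[1]:
            best = (i, r)
    return best


def run_node():
    # Imported here so the helper above works without ROS installed
    import rospy
    from sensor_msgs.msg import LaserScan

    def on_scan(msg):
        hit = closest_return(msg.ranges, msg.range_min, msg.range_max)
        if hit is not None:
            index, distance = hit
            # Bearing of the reading in the scanner frame
            bearing = msg.angle_min + index * msg.angle_increment
            rospy.loginfo_throttle(
                1.0, "nearest obstacle %.2f m at %.2f rad" % (distance, bearing))

    rospy.init_node("lidar_listener")  # hypothetical node name
    rospy.Subscriber("lidar_scan", LaserScan, on_scan)
    rospy.spin()
```

Calling `run_node()` while the simulation is running should log roughly one line per second. If the launch file places the topics in a namespace, the topic name would need adjusting.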

Subscribed topics (incomplete list):

- cmd_vel : geometry_msgs/Twist : command to move robot base

- finger1_controller/command : std_msgs/Float64 : position command for gripper finger 1

- finger2_controller/command : std_msgs/Float64 : position command for gripper finger 2
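The command topics above can be driven from a small Python node along the following lines. This is only a sketch: the node name, the speed limits in `base_command`, and the finger position value are illustrative assumptions, not values taken from the package (see `scripts/example/test_pickup.py` for the package's own usage). Because the base is omnidirectional, the sketch sets `linear.x`, `linear.y` and `angular.z` of the Twist.

```python
def clamp(value, limit):
    """Limit value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def base_command(vx, vy, wz, max_linear=0.2, max_angular=0.5):
    """Return (vx, vy, wz) clamped to illustrative speed limits (assumed values)."""
    return (clamp(vx, max_linear), clamp(vy, max_linear), clamp(wz, max_angular))


def run_node():
    # Imported here so the helpers above work without ROS installed
    import rospy
    from geometry_msgs.msg import Twist
    from std_msgs.msg import Float64

    rospy.init_node("base_and_gripper_demo")  # hypothetical node name
    base_pub = rospy.Publisher("cmd_vel", Twist, queue_size=1)
    finger1_pub = rospy.Publisher("finger1_controller/command", Float64, queue_size=1)
    finger2_pub = rospy.Publisher("finger2_controller/command", Float64, queue_size=1)

    twist = Twist()
    # Request 0.5 m/s forward; the helper clamps it to the assumed limit
    twist.linear.x, twist.linear.y, twist.angular.z = base_command(0.5, 0.0, 0.0)

    # Placeholder finger position: check the gripper model for the valid range and sign
    finger_position = 0.0

    rate = rospy.Rate(10)
    for _ in range(20):  # publish for about two seconds
        if rospy.is_shutdown():
            break
        base_pub.publish(twist)
        finger1_pub.publish(Float64(data=finger_position))
        finger2_pub.publish(Float64(data=finger_position))
        rate.sleep()

    base_pub.publish(Twist())  # all-zero Twist to stop the base
```

Publishing repeatedly rather than once avoids losing the first message while the publisher connections are still being established.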

See here for information on using ROS actions

- arm_controller/follow_joint_trajectory : control_msgs/FollowJointTrajectoryAction : controls the movement of the UR10 arm
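A minimal sketch of sending a goal to this action from Python is shown below, using `actionlib.SimpleActionClient`. The joint names are the standard ones from the universal_robot UR10 description and are assumed, not confirmed, to match this package's URDF (compare with the names on joint_states). The helper `linear_waypoints` and the function `send_trajectory` are hypothetical names introduced for the example.

```python
# Standard UR joint names from universal_robot; assumed to match this robot's URDF
UR10_JOINTS = [
    "shoulder_pan_joint", "shoulder_lift_joint", "elbow_joint",
    "wrist_1_joint", "wrist_2_joint", "wrist_3_joint",
]


def linear_waypoints(start, goal, steps, duration):
    """Return [(positions, time_from_start), ...] interpolated from start to goal.

    The start pose itself is omitted; the last waypoint equals goal at `duration` seconds.
    """
    if len(start) != len(goal):
        raise ValueError("start and goal must have the same number of joints")
    waypoints = []
    for k in range(1, steps + 1):
        fraction = float(k) / steps
        positions = [s + fraction * (g - s) for s, g in zip(start, goal)]
        waypoints.append((positions, fraction * duration))
    return waypoints


def send_trajectory(start, goal, steps=4, duration=4.0):
    """Send a joint-space move to the arm; call rospy.init_node() before using this."""
    import actionlib
    import rospy
    from control_msgs.msg import FollowJointTrajectoryAction, FollowJointTrajectoryGoal
    from trajectory_msgs.msg import JointTrajectoryPoint

    client = actionlib.SimpleActionClient(
        "arm_controller/follow_joint_trajectory", FollowJointTrajectoryAction)
    client.wait_for_server()

    action_goal = FollowJointTrajectoryGoal()
    action_goal.trajectory.joint_names = UR10_JOINTS
    for positions, t in linear_waypoints(start, goal, steps, duration):
        point = JointTrajectoryPoint()
        point.positions = positions
        point.time_from_start = rospy.Duration(t)
        action_goal.trajectory.points.append(point)

    client.send_goal(action_goal)
    client.wait_for_result()
    return client.get_result()
```

Here `start` would typically be the current arm joint positions read from joint_states, in the same order as `UR10_JOINTS`, and `goal` the desired joint angles in radians.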

Parameters:

- robot_description : the URDF model of the robot

- target_description : URDF model of an individual pick-up target

- scenario : data on the locations of the targets in the world (see `scenario_all.yaml` for an example of the internal format)

The robot is represented in Unified Robot Description Format (URDF), generated using Xacro XML macros for flexibility. The URDF includes `<gazebo>` tags to encode robot actuation and sensing. You are provided with a fully implemented model of a suitable robot, including a simple two-finger gripper, two cameras, and a scanning LIDAR sensor on a mobile base. Your options for customizing the robot include:

- Using the integrated example robot provided in `models/example_robot/farscope_example_robot.urdf.xacro` as is

- Making your own modified version of `models/example_robot/farscope_example_robot.urdf.xacro` to move, add or remove cameras

- Replacing the simple gripper with something of your own design including custom CAD (see URDF tutorials) and actuation (warning: custom actuation is difficult and requires learning ros_control)

- Making a complete new robot from scratch (not recommended: lots of ROS detail to learn)

TO DO

The goal of the group project is to experience integration of a robotic system, not to perform research-grade innovation in any individual subsystem. Therefore the challenge has been subtly modified to simplify some elements. In particular, the target object has been made easy to grasp, with a handy lip at the top to avoid the need for friction gripping. Also, it has been made easy to detect, with a contrasting colour and symmetrical appearance.

Physics simulation is hard. Even with Gazebo, it would take considerable tuning to represent realistic grasping, contact and friction. Be ready for some odd behaviour:

- The robot gently rotates even while it is commanded to stay still

- If you drive the robot into a shelf, the robot will probably flip itself over, but the shelf will be undamaged

- A target may spontaneously jump from your grasp

- A target may mysteriously cling to you when released

These are things you will just have to work around in the pursuit of your project.